How to Find Reliable Proxy Servers for Web Scraping

In web scraping, reliable proxies are an essential part of successful data extraction. Proxies can help protect your identity, avoid throttling by servers, and let you access restricted data. However, finding the right proxy solution can seem overwhelming, with countless options available online. This guide is designed to help you navigate the complicated landscape of proxies and identify the most effective ones for your web scraping needs.

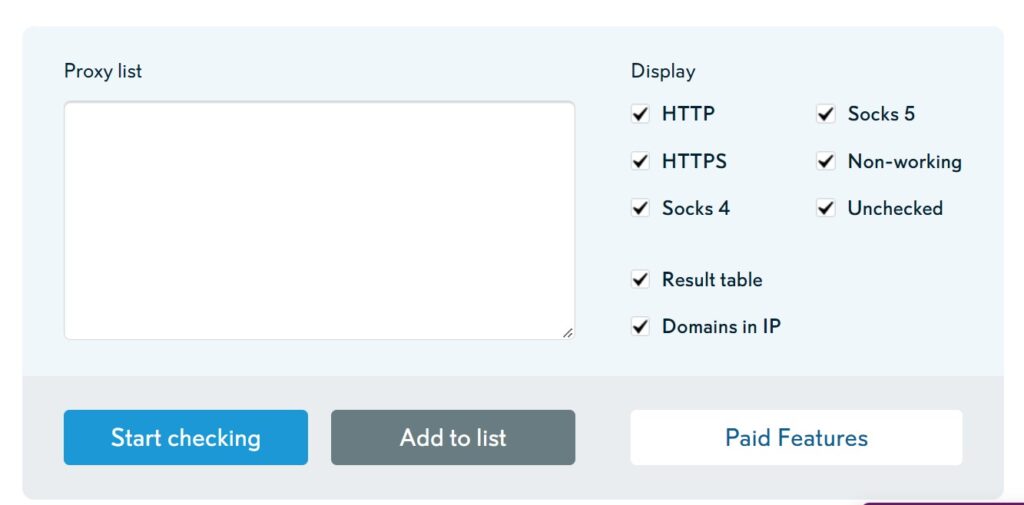

When searching for proxies, you'll encounter terms like proxy scrapers, proxy checkers, and proxy lists. These tools are vital for sourcing high-quality proxies and making sure they perform well for your specific tasks. Whether you are looking for free options or evaluating paid services, understanding how to assess proxy speed, anonymity, and reliability will significantly improve your web scraping projects. The sections below cover best practices for finding and using proxies so that your data scraping is both efficient and successful.

Understanding Proxy Solutions for Web Data Harvesting

Proxy servers play a crucial role in data harvesting by acting as intermediaries between the scraper and the destination sites. When you use a proxy, your requests to a website come from the proxy's IP address instead of your own. This hides your true IP and makes it less likely that the website will block you because of unusual request patterns. By using proxies, scrapers can access data without revealing their original IPs, allowing for more extensive and efficient scraping.
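To make the idea concrete, here is a minimal sketch of sending a request through an HTTP proxy using only Python's standard library. The function names `proxy_mapping` and `fetch_via_proxy` are illustrative, and the proxy address you pass in is whatever proxy you have available; nothing here is tied to a particular provider.

```python
import urllib.request


def proxy_mapping(proxy_host: str, proxy_port: int) -> dict[str, str]:
    """Return a scheme-to-proxy mapping that routes HTTP and HTTPS via one proxy."""
    proxy_url = f"http://{proxy_host}:{proxy_port}"
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url: str, proxy_host: str, proxy_port: int,
                    timeout: float = 10.0) -> bytes:
    """Fetch `url` through the given HTTP proxy and return the raw response body.

    The target site sees the proxy's IP address as the source of the request.
    """
    handler = urllib.request.ProxyHandler(proxy_mapping(proxy_host, proxy_port))
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Calling `fetch_via_proxy("http://example.com/", "203.0.113.10", 8080)` would attempt the request through a proxy at that placeholder address. Note that `urllib` handles HTTP proxies only; SOCKS proxies need a third-party library.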

Different types of proxies serve different functions in web scraping. HTTP proxies are suitable for regular web traffic, while SOCKS proxies work at a lower level and can relay a wider variety of traffic, including TCP and, with SOCKS5, UDP. The choice between HTTP and SOCKS proxies depends on the specific needs of your web scraping task, such as the protocols involved and the level of anonymity required. Additionally, proxies can be categorized as public or private, with private proxies generally offering better speed and consistency for scraping jobs.

To ensure successful data extraction, it is important to find high-quality proxies. Good proxies not only maintain quick response times but also provide a level of anonymity that protects your scraping efforts. Using a proxy checker can help you evaluate the performance and security of the proxies you intend to use. Ultimately, understanding how to use proxies effectively can significantly improve your web scraping, raising both performance and success rates.

Types of Proxies: HTTP, SOCKS4, and SOCKS5

In web scraping, understanding the different types of proxies is essential for effective data collection. HTTP proxies are designed for web traffic and are commonly used for visiting websites or gathering web content. They work well for standard HTTP requests, which covers most scraping of ordinary websites. However, they are not suited to non-HTTP traffic.

SOCKS4 proxies provide a more flexible option for web scraping, as they relay TCP connections regardless of the application protocol carried over them. This adaptability allows users to bypass restrictions and access various online resources. However, SOCKS4 does not support UDP, authentication, or IPv6 traffic, which may limit its applicability in certain situations.

The SOCKS5 proxy is the most capable of the three. It handles both TCP and UDP, supports authentication, and can handle IPv6 addresses. This makes SOCKS5 proxies a strong choice for web scraping, particularly when anonymity is a concern. Their ability to tunnel many kinds of traffic makes them useful for fetching data from a wide range of sources.
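In practice, the proxy type is often written as a URL scheme such as `http://`, `socks4://`, or `socks5://`. The sketch below parses such a URL into a small record and rejects one combination the text above rules out: a password on a SOCKS4 proxy. `ProxyEndpoint`, `parse_proxy_url`, and the set of accepted schemes are illustrative names and choices, not part of any particular tool.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit

# Schemes this sketch accepts; an assumption made for illustration.
SUPPORTED_SCHEMES = {"http", "socks4", "socks5"}


@dataclass(frozen=True)
class ProxyEndpoint:
    scheme: str
    host: str
    port: int
    username: Optional[str] = None
    password: Optional[str] = None


def parse_proxy_url(proxy_url: str) -> ProxyEndpoint:
    """Split a proxy URL into its parts and apply basic sanity checks."""
    parts = urlsplit(proxy_url)
    if parts.scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {parts.scheme!r}")
    if parts.hostname is None or parts.port is None:
        raise ValueError("proxy URL must include a host and a port")
    # SOCKS4 has no password authentication, so credentials imply SOCKS5.
    if parts.scheme == "socks4" and parts.password is not None:
        raise ValueError("SOCKS4 does not support passwords; use SOCKS5")
    return ProxyEndpoint(parts.scheme, parts.hostname, parts.port,
                         parts.username, parts.password)
```

For example, `parse_proxy_url("socks5://scraper:secret@198.51.100.7:1080")` yields an endpoint with scheme `socks5`, while the same credentials on a `socks4://` URL raise a `ValueError`.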

Finding Dependable Proxy Providers

When looking for dependable proxy sources for web scraping, it's important to start with reputable providers that have a proven track record. Many people opt for premium services that offer private proxies, which tend to be more reliable and faster than free alternatives. These providers often have a good reputation for uptime and support, so users receive consistent performance. Reading customer testimonials and community recommendations can help you locate trustworthy options.

Another approach is to use proxy lists and tools that scrape proxies from various websites. While there are many such sources, the quality can differ significantly. To make sure that the proxies are reliable, consider using a proxy checker to verify their performance and anonymity. Concentrate on sources that refresh their lists frequently, as this helps you access up-to-date, active proxies, which is vital for maintaining efficiency in web scraping projects.
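As a rough illustration of what a proxy scraper does once it has downloaded a page, the sketch below pulls `IP:port` pairs out of arbitrary text, discards malformed entries, and removes duplicates. The function name `extract_proxies` and the IPv4-only pattern are assumptions made for this example; real lists may use other formats.

```python
import ipaddress
import re

# Matches candidates shaped like 203.0.113.5:8080 (IPv4 only in this sketch).
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text: str) -> list[str]:
    """Return unique, well-formed 'ip:port' strings in order of first appearance."""
    seen: set[str] = set()
    proxies: list[str] = []
    for host, port in PROXY_PATTERN.findall(text):
        try:
            ipaddress.IPv4Address(host)  # rejects octets above 255
        except ValueError:
            continue
        if not 1 <= int(port) <= 65535:
            continue
        candidate = f"{host}:{port}"
        if candidate not in seen:
            seen.add(candidate)
            proxies.append(candidate)
    return proxies
```

Extraction only tells you that an entry looks like a proxy address. Whether it actually works still has to be checked separately.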

Lastly, it's important to distinguish between HTTP and SOCKS proxies. HTTP proxies are typically used for web browsing, whereas SOCKS proxies are more versatile and can carry multiple types of traffic. If your web scraping requires capabilities beyond plain HTTP, a SOCKS proxy may be the better choice. Knowing the difference between public and private proxies can also inform your decision. Public proxies are usually free but tend to be less secure and slower, whereas private proxies, while paid, offer better performance and security for web scraping.

Proxy Scrapers: Tools and Techniques

When it comes to web scraping, having the right tools is crucial for collecting data effectively. Proxy scrapers are designed to simplify the process of compiling proxy lists from diverse sources. These tools can save time and give you a wide range of proxies for your scraping tasks. A free proxy scraper can be a good way to begin, but it's vital to assess the quality and reliability of the proxies it collects.

For those who want their scraping to succeed consistently, a fast proxy scraper that can also quickly verify whether proxies work is essential. Tools like ProxyStorm offer advanced features that help users sort proxies by parameters such as speed, anonymity level, and type (HTTP or SOCKS). By using proxy checkers of this kind, scrapers can automate much of the evaluation, making data extraction more streamlined.
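The kind of sorting described above can be sketched in a few lines. This is not how ProxyStorm or any specific tool works; `ProxyRecord`, `select_proxies`, the three anonymity labels, and the default thresholds are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Higher rank means better concealment; the labels are this sketch's convention.
ANONYMITY_RANK = {"transparent": 0, "anonymous": 1, "high": 2}


@dataclass(frozen=True)
class ProxyRecord:
    address: str       # e.g. "203.0.113.5:8080"
    protocol: str      # "http", "socks4", or "socks5"
    anonymity: str     # a key of ANONYMITY_RANK
    latency_ms: float  # measured response time


def select_proxies(records: Iterable[ProxyRecord],
                   protocol: Optional[str] = None,
                   min_anonymity: str = "anonymous",
                   max_latency_ms: float = 2000.0) -> list[ProxyRecord]:
    """Keep proxies that meet the criteria, fastest first."""
    threshold = ANONYMITY_RANK[min_anonymity]
    kept = [
        record for record in records
        if (protocol is None or record.protocol == protocol)
        and ANONYMITY_RANK[record.anonymity] >= threshold
        and record.latency_ms <= max_latency_ms
    ]
    return sorted(kept, key=lambda record: record.latency_ms)
```

Filtering first and sorting afterwards keeps the function easy to extend with further criteria, such as country or uptime, if a proxy list provides them.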

Understanding the difference between HTTP, SOCKS4, and SOCKS5 proxies is also necessary. Each type serves a different purpose, with SOCKS5 offering the most flexibility and supporting the widest range of traffic. When picking tools for proxy scraping, consider a proxy checker that measures proxy speed and anonymity. This helps confirm that the proxies you use are not only working but also suited to your specific web scraping needs, whether you are working on SEO tasks or automated data extraction projects.

Assessing and Validating Proxy Server Privacy

When using proxy servers for web scraping, it is important to verify that your proxies are genuinely anonymous. Testing proxy anonymity means checking whether your real IP address is revealed while using the proxy. This can be done with online services that report your current IP address, comparing the result before and after connecting through the proxy. A reliable anonymous proxy should conceal your original IP, showing only the proxy's IP address to any external sites you visit.

To describe the level of privacy, proxies are commonly grouped into three types: transparent, anonymous, and high anonymity. Transparent proxies do not conceal your IP and can be readily identified, while anonymous proxies hide your IP but may still identify themselves as proxies. High anonymity proxies, on the other hand, hide both your IP and the fact that a proxy is in use, so requests appear to come from a regular user. A proxy checker lets you determine which type of proxy you are working with and confirm that you are using the best option for protected scraping.
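One way to tell the three types apart is to send a request through the proxy to a service that echoes back the headers it received, then inspect them. The sketch below classifies a proxy from such an echoed header set. The list of proxy-revealing headers is a common convention rather than a complete or authoritative one, and `classify_anonymity` is an illustrative name.

```python
import re

# Headers that proxies commonly add; an assumed, non-exhaustive list.
PROXY_REVEALING_HEADERS = (
    "via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection",
)


def classify_anonymity(real_ip: str, observed_headers: dict[str, str]) -> str:
    """Classify a proxy from the headers the target server saw.

    `observed_headers` is what a header-echo service reported for a request
    sent through the proxy; `real_ip` is your address without the proxy.
    """
    normalized = {name.lower(): value for name, value in observed_headers.items()}
    # Match the IP as a whole token so 203.0.113.9 does not match 203.0.113.99.
    ip_pattern = re.compile(rf"(?<![\d.]){re.escape(real_ip)}(?![\d.])")
    if any(ip_pattern.search(value) for value in normalized.values()):
        return "transparent"     # the real IP leaked to the target
    if any(name in normalized for name in PROXY_REVEALING_HEADERS):
        return "anonymous"       # IP hidden, but proxy use is visible
    return "high anonymity"      # no sign of a proxy in the headers
```

Header inspection is only one signal. A target site can also detect proxies in other ways, such as checking the connecting IP against known proxy ranges, so a "high anonymity" result here is a necessary check rather than a guarantee.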

Regularly testing and checking the privacy of your proxy servers not only safeguards your personal information but also boosts the performance of your web scraping efforts. Utilities such as Proxy Tool or specialized proxy validation tools can facilitate this process, saving you time and confirming that you are always employing high-quality proxies. Take advantage of these resources to maintain a robust and untraceable web scraping operation.

Free vs. Paid Proxies: Which to Choose?

When it comes to selecting proxies for web scraping, one of the key decisions you'll face is whether to use free or paid proxies. Free proxies are readily available and cost nothing, making them an attractive option for occasional scrapers or those on a tight budget. However, the reliability and speed of free proxies can be inconsistent. They often come with drawbacks such as slow connections, weaker anonymity, and a higher likelihood of being blocked by target websites, which can hinder your extraction efforts.

On the flip side, paid proxies generally provide a more dependable and secure solution. They often come with features like dedicated IP addresses, higher speed, and stronger anonymity. Paid proxy services frequently offer customer support, helping you troubleshoot any issues that arise during your scraping tasks. Moreover, these proxies are less likely to be blacklisted, which makes for a smoother and more efficient scraping process, especially on bigger and more complex projects.

Ultimately, the decision between free and paid proxies depends on your specific needs. If you are doing limited scraping and can tolerate some downtime or reduced speeds, free proxies may suffice. However, if your scraping operations require speed, reliability, and anonymity, investing in a paid proxy service is likely the better option.

Recommended Practices for Using Proxies in Automation

When deploying proxies for automated processes, it's essential to rotate them regularly to reduce the risk of detection and blocking by target websites. A proxy rotation strategy spreads requests across many proxies, lowering the chances of hitting rate limits or triggering security mechanisms. You can feed the rotation from an online proxy list generator that provides a varied set of proxies, or from a fast proxy scraper that retrieves fresh proxies frequently.
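A simple round-robin rotation can be sketched as follows. `ProxyRotator` and its methods are illustrative names; the sketch cycles through a list in order and lets the caller drop a proxy that has started failing.

```python
from typing import Iterable


class ProxyRotator:
    """Hand out proxies in round-robin order and drop ones that fail."""

    def __init__(self, proxies: Iterable[str]) -> None:
        # dict.fromkeys removes duplicates while keeping the original order.
        self._proxies = list(dict.fromkeys(proxies))
        if not self._proxies:
            raise ValueError("at least one proxy is required")
        self._index = 0

    def next_proxy(self) -> str:
        """Return the next proxy in the rotation."""
        if not self._proxies:
            raise RuntimeError("no working proxies left")
        proxy = self._proxies[self._index]
        self._index = (self._index + 1) % len(self._proxies)
        return proxy

    def mark_failed(self, proxy: str) -> None:
        """Remove a proxy from the rotation, keeping the order of the rest."""
        if proxy not in self._proxies:
            return
        position = self._proxies.index(proxy)
        self._proxies.remove(proxy)
        if position < self._index:
            self._index -= 1  # keep pointing at the same upcoming proxy
        self._index = self._index % len(self._proxies) if self._proxies else 0
```

A scraper would call `next_proxy()` before each request and `mark_failed()` when a proxy times out or gets blocked. Round-robin is the simplest policy; random or weighted selection are reasonable alternatives when some proxies are faster than others.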

Evaluating the reliability and speed of your proxies is just as important. Use a proxy checker to test their performance before you start data extraction. Tools that report detailed data on latency and success rates let you identify the most efficient proxies for your automation. It's also important to monitor your proxies continuously. A proxy checker should be part of your workflow so that you are always using high-quality proxies that remain functional.
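Latency testing can be sketched without committing to a particular HTTP client by passing in a "probe", a callable that performs one request through a given proxy. All names here (`measure_latency`, `rank_by_latency`, `make_probe`) are hypothetical, and treating any `OSError` as a dead proxy is a simplifying assumption.

```python
import time
from typing import Callable, Iterable, Optional


def measure_latency(probe: Callable[[], None],
                    clock: Callable[[], float] = time.perf_counter) -> Optional[float]:
    """Return the seconds one probe took, or None if it failed.

    Network failures (timeouts, refused connections, urllib's URLError)
    are subclasses of OSError, so they mark the proxy as unusable here.
    """
    start = clock()
    try:
        probe()
    except OSError:
        return None
    return clock() - start


def rank_by_latency(proxies: Iterable[str],
                    make_probe: Callable[[str], Callable[[], None]],
                    clock: Callable[[], float] = time.perf_counter,
                    ) -> list[tuple[str, float]]:
    """Probe each proxy once and return the working ones, fastest first."""
    timings: dict[str, float] = {}
    for proxy in proxies:
        latency = measure_latency(make_probe(proxy), clock)
        if latency is not None:
            timings[proxy] = latency
    return sorted(timings.items(), key=lambda item: item[1])
```

In real use, `make_probe` would return a function that fetches a small test page through the proxy with a timeout. A single measurement is noisy, so repeating the probe and re-running the ranking periodically gives a more dependable picture.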

Finally, know the difference between private and public proxies when setting up your automation. Although public proxies are inexpensive or free, they often lack the reliability and security of private options. If your tasks require stable and protected connections, investing in private proxies may be worthwhile. Weigh your own requirements for proxy anonymity and performance to select the best proxies for data harvesting and keep your operations running smoothly.